About

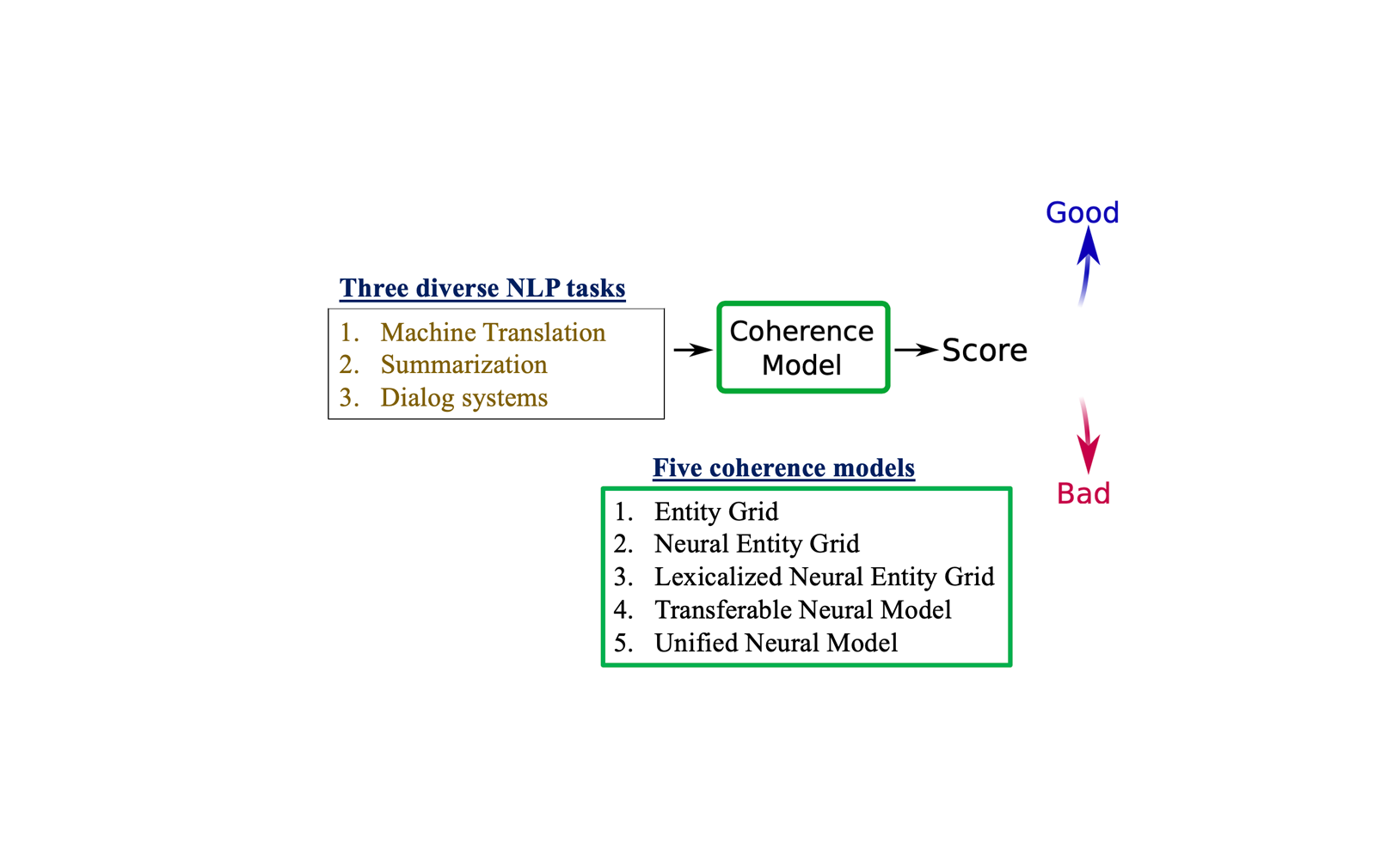

This resource contains the information regarding code and data used for evaluation in “Rethinking Coherence Modeling: Synthetic vs. Downstream Tasks” paper (EACL 2021).

Source code of evaluated coherence models

We benchmark the performance of five coherence models. For each of the coherence models, we conducted experiments with publicly available codes from the respective authors. Links are given below:

- Entity Grid

- Neural Entity Grid

- Lexicalized Neural Entity Grid

- Transferable Neural Model

- Unified Neural Model

Datasets

Machine Translation Evaluation Dataset: We use the reference and the system translations provided by WMT2017-2018 as our test data, under the assumption that the reference translations are more coherent than the system translations. This results in a testset of 20,680 reference-system translation document-pairs.

Abstractive Summarization Evaluation Dataset: We use the CNN/DM dataset for this task. We collect the reference summaries from the CNN/DM testset as well as the summaries generated by the four representative abstractive summarization systems: Pointer-Generator, BertSumExtAbs(BSEA), UniLM, and SENECA.

Extractive Summarization Evaluation Dataset: The dataset from Barzilay and Lapata(2008) provides 16 sets of summaries where each set corresponds to a multi-document cluster and contains summaries generated by 5 systems and 1 human.

Next Utterance Ranking Evaluation Dataset: We evaluated the coherence models on both datasets of the DSTC8 response selection track, i.e., the Advising and Ubuntu datasets. The former contains two-party dialogs that simulate a discussion between a student and an academic advisor, while the latter consists of multi-party conversations extracted from the Ubuntu IRC channel.

The datasets can be found in this LINK

Citation

Please cite our paper if you found the resources in this repository useful.

@inproceedings{mohiuddin-etal-2021-rethinking,

title={Rethinking Coherence Modeling: Synthetic vs. Downstream Tasks},

author={Tasnim Mohiuddin and Prathyusha Jwalapuram and Xiang Lin and Shafiq Joty},

year={2021},,

booktitle = "Proceedings of the 16th Annual Meeting of the European chapter of the Association for Computational Linguistics (EACL) 2021",

}

Licence

MIT licence.