About

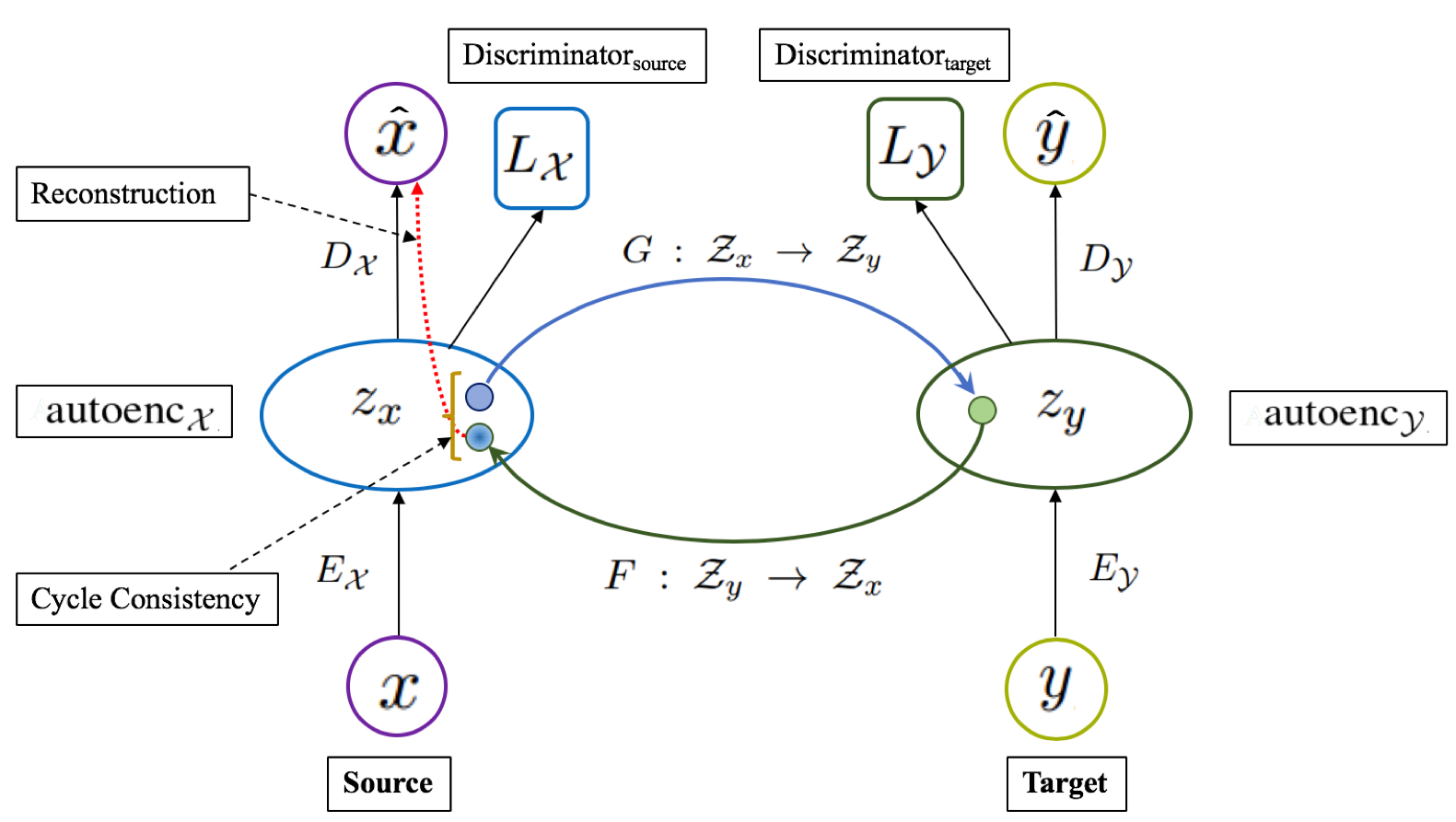

This resource contains the source code for our NAACL-HLT 2019 paper, "Revisiting Adversarial Autoencoder for Unsupervised Word Translation with Cycle Consistency and Improved Training".

Source code

Datasets

- Conneau et al. (2018) dataset: 300-dimensional fastText monolingual embeddings trained on Wikipedia monolingual corpora, plus gold dictionaries for 110 language pairs.

- Dinu-Artexe dataset: 300-dimensional monolingual embeddings for English, Italian, and Spanish. The English and Italian embeddings were trained on the WaCky corpora using CBOW, while the Spanish embeddings were trained on WMT News Crawl.
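
Both datasets consist of monolingual word embeddings, so a quick sanity check after downloading is to load a file and confirm its dimensionality. The sketch below is not part of the released code. It assumes the embeddings are stored in the common word2vec/fastText text layout (a header line with the vocabulary size and dimension, then one word and its values per line), and the function name `load_text_embeddings` is hypothetical.

```python
import io


def load_text_embeddings(handle, max_words=None):
    """Read embeddings from a text stream.

    Assumed layout (not confirmed by this README): the first line is
    "<vocab_size> <dim>", and every following line is a word followed by
    <dim> space-separated floats.
    """
    header = handle.readline().split()
    dim = int(header[1])
    embeddings = {}
    for line in handle:
        parts = line.rstrip().split(" ")
        word, values = parts[0], parts[1:]
        if len(values) != dim:
            continue  # skip lines that do not match the declared dimension
        embeddings[word] = [float(v) for v in values]
        if max_words is not None and len(embeddings) >= max_words:
            break
    return dim, embeddings


# Tiny in-memory sample standing in for a real embedding file.
sample = io.StringIO("2 3\nhello 0.1 0.2 0.3\nciao 0.4 0.5 0.6\n")
dim, vectors = load_text_embeddings(sample)
print(dim, sorted(vectors))
```

For a downloaded file, the same function can be called on an open file handle, for example `open(path, encoding="utf-8")`, where `path` points at whichever embedding file you fetched. With the datasets above, the reported dimension should be 300.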

Citation

@InProceedings{mohiuddin-joty-naacl-19,
  title = "{Revisiting Adversarial Autoencoder for Unsupervised Word Translation with Cycle Consistency and Improved Training}",
  author = {Tasnim Mohiuddin and Shafiq Joty},
  booktitle = {Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies},
  series = {NAACL-HLT'19},
  publisher = {Association for Computational Linguistics},
  address = {Minneapolis, USA},
  pages = {xx--xx},
  url = {},
  year = {2019}
}

Licence

Creative Commons Public Licenses.